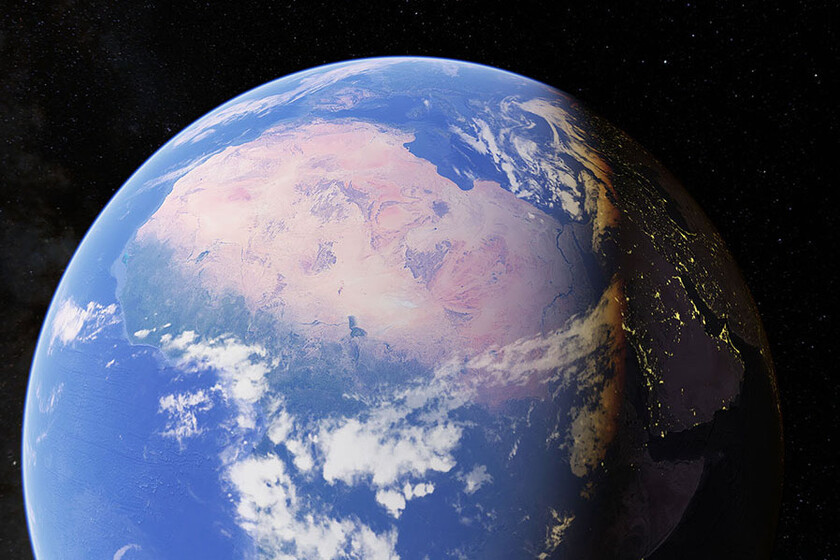

When we talk about deepfakes, what usually comes to mind are videos in which famous faces are swapped in, what the subjects say is altered, or entire advertisements are fabricated. But there is more: a group of researchers has now shown that the technique can also be used to create fake maps.

As detailed by a team of researchers from the University of Washington, it is possible to generate deepfake satellite images using artificial intelligence. By generating their own deepfakes, the researchers now hope to build a more powerful tool for detecting deepfakes in satellite images.

As the researchers explain, buildings, structures and vegetation from different places can be applied to a given location: for example, placing buildings from Madrid and vegetation from Lugo onto a map of Cadiz. In their tests, they applied false vegetation from Seattle and buildings from Beijing to a map of the city of Tacoma in the United States.

The value of satellite deepfakes

Although these maps can be useful for understanding, for example, what a place looked like in the past or how it would be transformed if certain changes were made, that is often not how they are used. According to the researchers, deepfakes in satellite images can be used to misinform.

This is nothing new: for millennia, maps have been faked and modified to fool the enemy. However, when you see satellite images you assume they are real because, after all, they are photographs of the place and not a cartographer's interpretation. That is not necessarily so, and satellite-image deepfakes are the proof.

They believe that if an intruder gains access to satellite images of military equipment, for example, they could add false structures such as bridges to trap the enemy. Important buildings and targets could also be hidden from the enemy for as long as the manipulation goes undetected.

To make these fake satellite images, the team of researchers used generative adversarial networks (GANs). With this type of adversarial AI it is relatively easy to create new content, and it is what is used, for example, to create fake faces. Now, by reverse engineering the process, they hope to create tools capable of easily detecting this new type of deepfake.
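For readers curious about what "adversarial" means here, the following is a minimal sketch of the two competing objectives in a generic GAN, not the researchers' actual model. The function names and the discriminator scores are hypothetical and chosen only for illustration: a discriminator outputs the probability that an image is real, and the generator is rewarded when its fakes receive high scores.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: low when real images score near 1 and fakes near 0."""
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating generator loss: low when fakes are scored as real."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# Hypothetical discriminator outputs (probability that an image is real).
real_scores = [0.9, 0.8]    # genuine satellite tiles
weak_fakes = [0.1, 0.2]     # fakes the discriminator spots easily
strong_fakes = [0.6, 0.7]   # fakes that partly fool the discriminator

print("generator loss, weak fakes:  ", generator_loss(weak_fakes))
print("generator loss, strong fakes:", generator_loss(strong_fakes))
print("discriminator loss, weak fakes:  ", discriminator_loss(real_scores, weak_fakes))
print("discriminator loss, strong fakes:", discriminator_loss(real_scores, strong_fakes))
```

As the fakes become more convincing, the generator's loss falls while the discriminator's rises. Training alternates between the two networks, and a discriminator of this kind is, in essence, a detector for fake images.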

Via | University of Washington